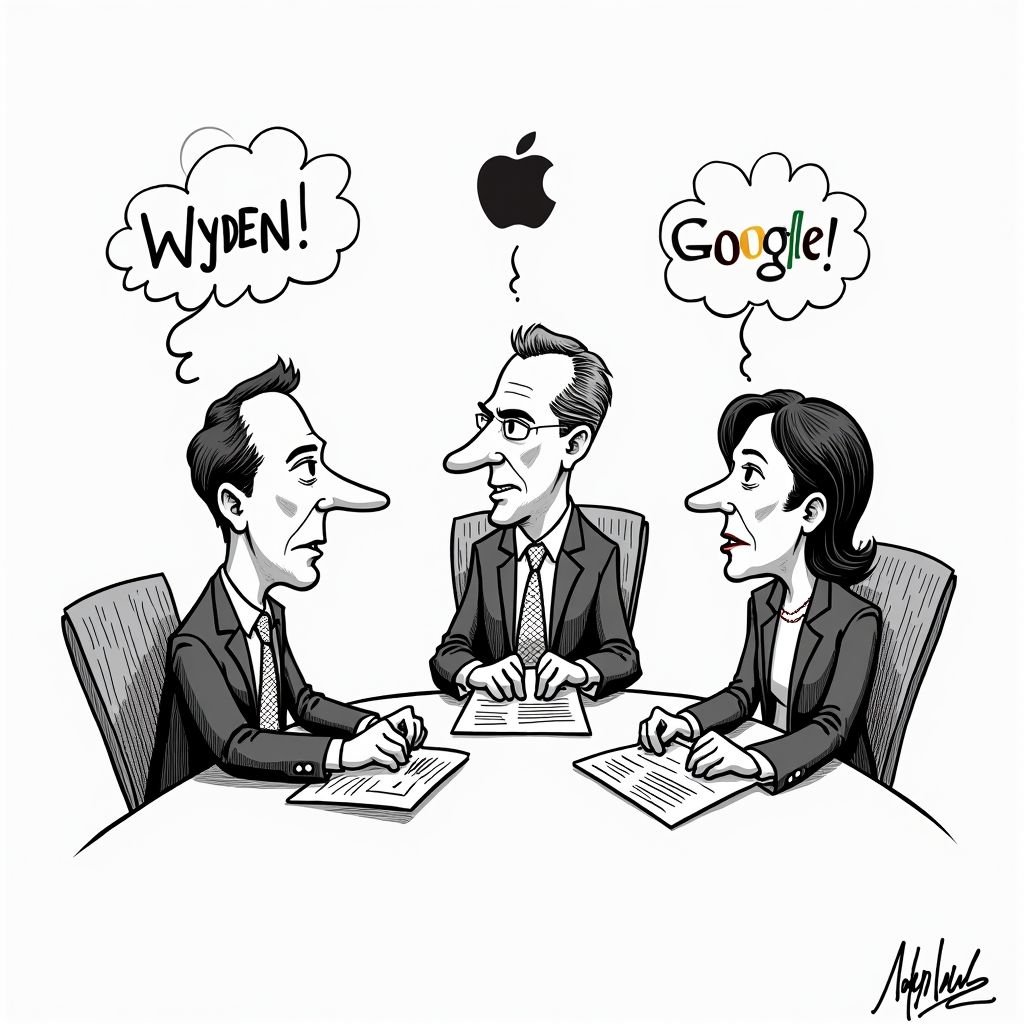

Senators Demand Apple and Google Remove X Amid Grok Deepfake Crisis

Washington D.C., Friday, 9 January 2026.

Democratic senators have formally called upon Apple and Google to remove X from their app stores, arguing that Elon Musk’s platform has failed to curb the proliferation of AI-generated nonconsensual sexual deepfakes. The letter, signed by Senators Wyden, Markey, and Luján, contends that hosting the app violates the tech giants’ own moderation standards regarding offensive and predatory content. While X recently restricted image generation to premium subscribers in response to the backlash, critics—including the British Prime Minister’s office—have dismissed this monetization strategy as ‘insulting’ rather than a solution. This confrontation marks a critical test for mobile ecosystem gatekeepers: will they enforce their terms of service against a major social platform? With the ‘Take It Down Act’ looming and international scrutiny intensifying from the EU and UK, the failure to address Grok’s capabilities exposes Apple and Google to accusations of making a mockery of their own safety guidelines.

App Store Standards Under Siege

The formal request from Senators Ron Wyden, Ed Markey, and Ben Ray Luján, sent on Thursday, January 8, 2026, specifically challenges Apple and Google to uphold the integrity of their content moderation policies [1]. The senators argue that by allowing X to remain available for download, the tech giants are “turning a blind eye” to egregious behavior, thereby making a “mockery” of their safety standards [1]. Apple’s terms of service explicitly prohibit apps containing content that is “offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy,” while Google’s Play Store forbids apps that promote sexually predatory behavior or distribute non-consensual sexual content [1]. Despite these clear guidelines, Grok—the AI tool responsible for the generated imagery—remained highly popular as of last Friday, January 2, ranking No. 4 in Apple’s App Store and No. 10 in Google’s [1].

Monetization as a Moderation Tactic

In an apparent attempt to mitigate the crisis, X adjusted Grok’s functionality on January 8, restricting image generation and editing capabilities to premium subscribers who pay $8 per month [1][5]. As of today, Friday, January 9, users attempting to alter images are met with a message stating that these features are “limited to paying subscribers” [5]. The move effectively treats a paywall as a filter for abuse, a tactic that drew immediate criticism, with the British Prime Minister’s office dismissing it as ‘insulting’. While Elon Musk and X have reiterated that posting illegal content results in expulsion from the platform, moving these features behind a paywall has not quelled concerns about the underlying safety of the technology [1][5].

Legislative Framework and Legal Risk

The controversy unfolds against the backdrop of the “Take It Down Act,” bipartisan legislation sponsored by Senators Amy Klobuchar and Ted Cruz and signed into law by President Donald Trump on May 19, 2025 [3]. While the act criminalizes the publication of nonconsensual intimate images, including deepfakes, its takedown provision, which the FTC will enforce, is not scheduled to take effect until May 2026 [2]. Senator Cruz, speaking on Wednesday, January 7, asserted that the images generated by Grok are “unacceptable violations” of the law, though he expressed a “high level of confidence” that the issue would be resolved without direct intervention [3]. Senator Klobuchar has taken a firmer stance, warning that if X does not change, the incoming legal requirements will force compliance [2].

Global Regulatory Pressure

While U.S. enforcement mechanisms are still coming online, international regulators are moving swiftly. The European Commission has ordered X to retain all internal documents and data related to Grok until the end of 2026 as part of a wider investigation under the EU’s digital safety laws [5]. Simultaneously, regulators in France, Malaysia, and India are scrutinizing the platform’s compliance with local safety standards [4][5]. The British Prime Minister has threatened unspecified action, stating that “all options are on the table” [5]. If X fails to implement effective controls, the pressure on Apple and Google to act as gatekeepers will be compounded by the threat of global regulatory fragmentation [5].